This article examines six BGP case studies that illustrate the crucial vulnerability of the Border Gateway Protocol.

BGP is one of the key tools for achieving redundant Internet connectivity. No other protocol can bring as much joy and freedom from care as BGP can. It is the glue that bonds the entire Internet and enables data communication between large networks operated by different organizations, but sometimes one awkward move can lead to a blackout of a major part of the global network. Let us recall the most notorious BGP accidents that bear evidence of its vulnerability.

“AS 7007 incident”

This was the first recorded global BGP accident and possibly the best known. It was just another ordinary day in 1997. The Internet was in its infancy and most providers fully trusted each other. SMTP servers ran as open relays. There were huge numbers of web proxies and anonymous dial-out services. People worried about the depletion of the IP address space, while network operators worried about router CPUs that could barely handle a complete BGP routing table of approximately 45,000 entries.

Suddenly, the Internet was no more. Operators and service providers around the world did their best to identify the cause, and they succeeded. The whole Internet of 1997 had converged on a single point: a cheap Bay Networks router in AS 7007. The immediate cause was quickly dealt with by disconnecting the defective router from the network. However, this did not resolve the ongoing problem: routers continued to fail throughout the Internet. How had the announcements been sent out? Was there a way to rectify the problem? Could the Internet, held together by IRC and duct tape, truly be over?

Within a few hours the issue had been resolved, and network operators throughout the world gathered to discuss the consequences of the accident and how it could have been prevented. It was a defining moment that changed the Internet forever. However, unlike many other changes, it went unnoticed by most users.

What happened to AS 7007 on April 25, 1997?

Theory

BGP is a protocol that allows one network on the Internet to inform another of its existence and of the networks that can be reached through it. In the same way, each network learns how to reach the other networks on the Internet. Upon receiving BGP information, routers choose the best route to each destination network and add it to their routing tables. BGP uses several attributes to determine the best route. The most obvious one is the number of networks on the way to the destination, that is, the length of the so-called AS-Path. The shorter the AS-Path, the better the route. Of course, this is only the essence of the theory, but you will see that it suffices. Another general rule is Longest Prefix Match: when a packet is forwarded, the route with the longest, most specific mask is preferred. In other words, if you see an announcement for the 130.95.0.0/16 network (130.95.0.0 to 130.95.255.255) via path A and an announcement for the 130.95.0.0/24 network (130.95.0.0 to 130.95.0.255) via path B, traffic to any host in 130.95.0.0/24 will follow path B, even if path A is much shorter.
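The Longest Prefix Match rule can be sketched in a few lines of Python. This is only an illustration built on the standard `ipaddress` module, not how a real router stores its forwarding table; the names `ROUTES` and `longest_prefix_match` are made up for this example, and the two prefixes are the ones from the paragraph above.

```python
import ipaddress

# The two routes from the example above: prefix -> path label.
ROUTES = {
    ipaddress.ip_network("130.95.0.0/16"): "A",
    ipaddress.ip_network("130.95.0.0/24"): "B",
}

def longest_prefix_match(destination, routes=ROUTES):
    """Return the path of the most specific route covering destination, or None."""
    address = ipaddress.ip_address(destination)
    candidates = [net for net in routes if address in net]
    if not candidates:
        return None
    # The longest mask wins, regardless of any other property of the route.
    best = max(candidates, key=lambda net: net.prefixlen)
    return routes[best]

print(longest_prefix_match("130.95.0.17"))  # covered by both routes
print(longest_prefix_match("130.95.7.1"))   # covered only by the /16
```

A host inside the /24 is sent along path B, while the rest of the /16 still follows path A. That is why a more specific announcement attracts traffic no matter how long its AS-Path is.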

Accident Causes

This router sat in AS 7007 and learned the full routing table through BGP. The reason for what followed is shrouded in mystery (rumor has it that it was a router software bug). At some point, the router began splitting all received routes into /24s and sending them back to its BGP neighbors. The entire Internet routing table, disaggregated into /24s, spilled into the global network. During disaggregation the AS-Path attribute was cleared, so it looked as if all of these networks were in AS 7007. Because the /24s were more specific than the legitimate routes, routers across the global network started sending their traffic to this AS. The whole Internet now depended on the 45 Mb/s link connected to AS 7007. The root of the problem was quickly eliminated: the port on the failed router was disabled, which stopped the fake announcements coming from it. Nevertheless, all the other routers kept passing the previously received announcements around in a loop. The “new” routing table consisted of 250,000 entries. Routers could not cope with this load, so they rebooted, re-learned routes from their neighbors, passed them on, and rebooted again. Announcements scoured the Internet at the speed of light for several hours. Backbone providers solved the problem as follows: they shut down equipment and ports, rebooted the equipment, and added inbound filters to restore connectivity within their own networks. Thus, after a few hours, balance was restored.
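To get a feel for how disaggregation inflates a routing table, here is a small sketch. It does not reproduce the actual router bug, whose details are unknown; it only mimics the described effect. The function names, the input table, and the private-use AS numbers in the original paths are assumptions made for illustration.

```python
import ipaddress

def disaggregate(prefix, new_prefixlen=24):
    """Split a prefix into /24s; prefixes already /24 or longer are kept as-is."""
    net = ipaddress.ip_network(prefix)
    if net.prefixlen >= new_prefixlen:
        return [net]
    return list(net.subnets(new_prefix=new_prefixlen))

def leak(routes, leaking_as=7007):
    """Re-announce every route as /24s with the original AS-Path discarded."""
    leaked = []
    for prefix, _original_path in routes:
        for more_specific in disaggregate(prefix):
            # The original path is lost; the leaking AS appears as the origin.
            leaked.append((str(more_specific), [leaking_as]))
    return leaked

# Hypothetical input table: (prefix, AS-Path) pairs.
table = [
    ("130.95.0.0/16", [64500, 64501, 64502]),
    ("192.0.2.0/24", [64503]),
]
leaked = leak(table)
print(len(table), "routes became", len(leaked))
```

A single /16 alone turns into 256 separate /24 routes, each of which beats the original on specificity and claims AS 7007 as its origin.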

Pakistan blocked YouTube worldwide

This event did not pass unnoticed, even by the newer generation of users.

On February 24, 2008, the entire world lost access to most YouTube resources for an hour and a half. In essence, this happened because the Government of Pakistan decided to block access to the video service over offensive videos posted there. Evidently, they went overboard and blocked a whole block of IP addresses instead of just a URL or domain. The Pakistani provider Pakistan Telecom went even further and deprived the entire world of YouTube.

Supposedly, they intended to route this traffic to some internal resource, but they leaked the routes to the “big” Internet. While YouTube announced a /22 network, Pakistan Telecom started sending /24 routes to its neighbor, the provider PCCW. Given the earlier BGP incidents, PCCW should have restricted the announcements it accepted from its customer, but unfortunately it did not, so the false announcement spread across the network. Within three minutes, the entire Internet had learned the new route.
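The kind of customer filter that would have stopped this announcement can be sketched as follows. This is a simplified model, not PCCW's configuration or any vendor's syntax. The customer's registered block below is a documentation prefix chosen purely for illustration, and the function name is hypothetical.

```python
import ipaddress

def accept_from_customer(announced, registered_blocks):
    """Accept an announcement only if it falls inside a block registered to the customer."""
    prefix = ipaddress.ip_network(announced)
    return any(
        prefix.subnet_of(ipaddress.ip_network(block))
        for block in registered_blocks
    )

# Hypothetical address space registered to the customer.
customer_blocks = ["203.0.113.0/24"]

print(accept_from_customer("203.0.113.128/25", customer_blocks))  # customer's own space
print(accept_from_customer("208.65.153.0/24", customer_blocks))   # someone else's space
```

With such a filter on the customer session, an announcement for address space the customer does not hold is dropped at the first hop and never reaches the rest of the Internet.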

Because the /24 route was more specific than the /22 (it had a longer mask), it was preferred, and practically all YouTube traffic was rerouted to it. This type of attack is called hijacking. An attacker can do anything with the captured traffic: drop it, analyze it for specific information, or modify it as necessary and return it to the network. However, judging by the fact that baffled Pakistan Telecom representatives were calling PCCW along with all of PCCW’s other customers, it was an honest mistake.

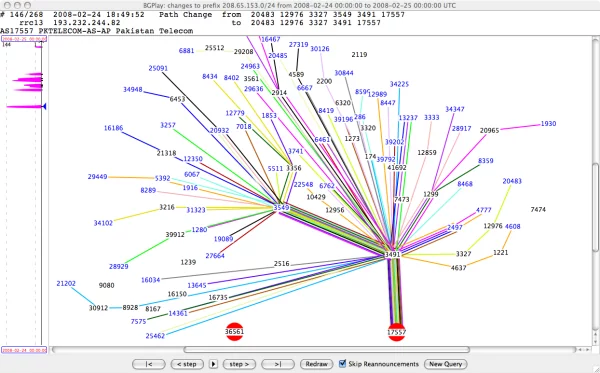

AS 17557 (Pakistan Telecom) announced the route 208.65.153.0/24

YouTube engineers took the first step: 1 hour 20 minutes after the problem appeared, they started announcing the /24 network themselves. As a result, part of the audience was able to resume watching videos. However, because of another BGP path metric, AS-Path length, many users still had no access. For instance, from PCCW’s point of view, Pakistan Telecom (a neighboring AS) appeared closer than the YouTube servers located somewhere on the other side of the continent.
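Once both sides announce the same /24, prefix length no longer decides anything and the AS-Path comparison takes over. The sketch below shows only that one step. Real BGP best-path selection evaluates other attributes first (local preference, for example), and the transit AS numbers 64500 and 64501 in the second path are invented for illustration.

```python
# Two announcements for the same prefix, as one router might see them.
# The transit ASNs 64500 and 64501 are made up; 17557 and 36561 are the
# origin ASNs named in the captions of this article.
CANDIDATES = [
    {"prefix": "208.65.153.0/24", "as_path": [17557]},
    {"prefix": "208.65.153.0/24", "as_path": [64500, 64501, 36561]},
]

def best_by_as_path(candidates):
    """Pick the route with the fewest AS hops (ties go to the first one seen)."""
    return min(candidates, key=lambda route: len(route["as_path"]))

best = best_by_as_path(CANDIDATES)
print("origin AS of the winning route:", best["as_path"][-1])
```

A router that sees the hijacker as a direct neighbor keeps choosing the one-hop path, which matches the observation that only part of the audience regained access.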

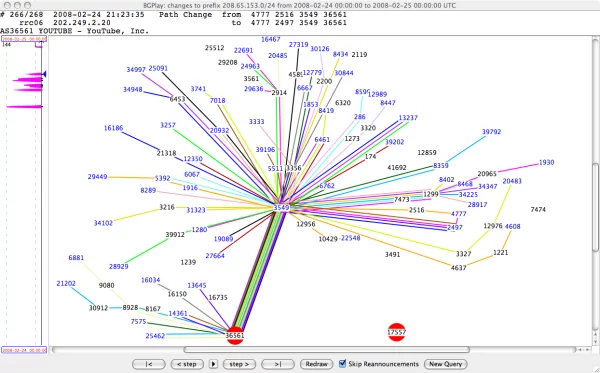

AS 36561 (YouTube) announced route 208.65.153.0/24

Ten minutes later, they started announcing /25 routes. This could have been a good temporary solution. Nevertheless, these announcements did not propagate far, probably because many providers filtered announcements with masks longer than /24. In effect, only neighboring peers learned the /25 routes, so the situation did not change much.
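The prefix-length filtering that most likely stopped the /25 routes can be modeled with a one-line check. The threshold of /24 comes from the text above; the function name is hypothetical, and a real filter would be expressed in router configuration rather than Python.

```python
import ipaddress

MAX_PREFIXLEN = 24  # announcements more specific than this are dropped

def passes_length_filter(prefix, max_prefixlen=MAX_PREFIXLEN):
    """Return True if the announcement is not more specific than the allowed mask."""
    return ipaddress.ip_network(prefix).prefixlen <= max_prefixlen

for announced in ("208.65.153.0/24", "208.65.153.0/25", "208.65.153.128/25"):
    print(announced, "accepted" if passes_length_filter(announced) else "filtered")
```

The /24 gets through, while both /25 halves are dropped by any provider that applies this rule.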

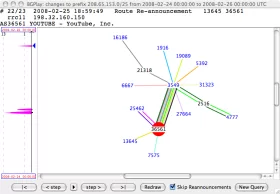

AS 36561 (YouTube) announced the routes 208.65.153.0/25 and 208.65.153.128/25

After another 20 minutes, Pakistan Telecom itself tried to resolve the issue. First, they tried AS-Path prepending, repeatedly adding their own AS number to their announcements. This attempt turned out to be futile, as could be seen from the new announcements propagating throughout the network. The problem was finally resolved 2 hours after it appeared, when PCCW blocked this network’s announcements and the route was gradually removed from routers across the Internet.

Visualization of the incident

A more detailed description of the accident

Google’s May 2005 Outage

It is worth mentioning that a very similar incident had happened to Google three years earlier, on May 7, 2005. This accident is known as “Google’s May 2005 Outage”. According to the prevailing opinion, it was caused by incorrect configuration of DNS servers. However, circumstantial evidence pointed inquisitive specialists of the time to signs typical of BGP routing problems. They based their analysis on historical data preserved by the RouteViews project.

The analysis showed that at the time Google held two /19 networks and one /22 network. Nonetheless, it announced 68 /24 blocks. This is usually done to steer traffic along specific routes. Many operators were already blocking announcements of /25 and longer.

However, from May 7 to May 9 the route 64.233.161.0/24, owned by Google (AS 15169), was also announced by another AS: AS 174, owned by a company called Cogent (unrelated to Google). Most autonomous systems, including those directly connected to AS 15169, chose the new route to AS 174.
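A prefix that suddenly appears with two different origin ASes is exactly the kind of signal that can be pulled out of archived routing data. The sketch below shows one simple way to spot it. It is not the researchers' actual method, and the transit AS numbers and the second prefix are invented for illustration.

```python
from collections import defaultdict

def find_multi_origin(announcements):
    """Return prefixes announced by more than one origin AS (last AS in the path)."""
    origins = defaultdict(set)
    for prefix, as_path in announcements:
        origins[prefix].add(as_path[-1])
    return {
        prefix: sorted(asns)
        for prefix, asns in origins.items()
        if len(asns) > 1
    }

# Hypothetical observations; only the origin ASNs 15169 and 174 and the
# prefix 64.233.161.0/24 come from the incident described above.
observed = [
    ("64.233.161.0/24", [64500, 15169]),
    ("64.233.161.0/24", [64501, 174]),
    ("192.0.2.0/24", [64500, 64502]),
]
print(find_multi_origin(observed))
```

A multi-origin prefix is not always a hijack, since some networks announce the same prefix from several ASes on purpose, but it is a cheap first indicator worth investigating.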

To users, this looked like a redirect from google.com services to other resources, unrelated to Google.

The initiative group that started this investigation (School of Computer Science, Carleton University, Ottawa, Canada) contacted Google and obtained useful information. First, Google told the group about its problems with DNS. Second, requests to the top-level domains had been redirected to other domains because DNS was not working correctly. Moreover, Google representatives said that they had a connection to Cogent but were indeed experiencing network problems with 64.233.161.0/24 at that time. Finally, Google’s engineering staff were informed about the BGP vulnerabilities and the possible consequences of such accidents.

Cogent expressed no wish to collaborate and reluctantly provided only the AS-Path involved in the incident, justifying its lack of collaboration with a non-disclosure agreement. Thus, the exact cause of the issue could not be established. Nevertheless, there are two possible causes: incorrect configuration and deliberate attack. The group believes the second to be the right one, for several reasons. Detailed reports about the problem can be read here.

Unsuccessful attempt by the Brazilian provider CTBC to announce a Full View as its own

This is an example of a fortunate combination of circumstances.

The provider Companhia de Telecomunicacoes do Brasil Central (CTBC) suddenly decided to announce a Full View to its two peers. This could have led to another problem on the scale of the AS 7007 incident and collapsed the entire Internet. However, the upstream providers did not pass these announcements on, which prevented the issue. Moreover, the warning systems for route leaks and route hijacks worked perfectly, so network operators were quickly informed of the problem. Still, the problem did not pass completely unnoticed.

The accident at Yandex

The Yandex accident of 2011 cannot be left out either. It was caused by Yandex and affected only Yandex. In this case, either because of a software error or, more likely, because of incorrect configuration (route redistribution, for example), the full BGP table leaked into the OSPF database. As a result, the SPF computation exhausted the device’s entire memory, and the device failed. The routing information spread through the network and quickly tore down the entire internal Yandex network.

Services were recovered gradually, slowly approaching the source of the problem.

3ve’s BGP hijacking scheme

This is a really interesting story of an ad fraud scheme that relied on hijacking the Border Gateway Protocol:

Members of 3ve (pronounced “eve”) used their large reservoir of trusted IP addresses to conceal a fraud that otherwise would have been easy for advertisers to detect. The scheme employed a thousand servers hosted inside data centers to impersonate real human beings who purportedly “viewed” ads that were hosted on bogus pages run by the scammers themselves — who then received a check from ad networks for these billions of fake ad impressions. Normally, a scam of this magnitude coming from such a small pool of server-hosted bots would have stuck out to defrauded advertisers. To camouflage the scam, 3ve operators funneled the servers’ fraudulent page requests through millions of compromised IP addresses.

About one million of those IP addresses belonged to computers, primarily based in the US and the UK, that attackers had infected with botnet software strains known as Boaxxe and Kovter. But at the scale employed by 3ve, not even that number of IP addresses was enough. And that’s where the BGP hijacking came in. The hijacking gave 3ve a nearly limitless supply of high-value IP addresses. Combined with the botnets, the ruse made it seem like millions of real people from some of the most affluent parts of the world were viewing the ads.

BGP hacking — known as “traffic shaping” inside the NSA — has long been a tool of national intelligence agencies. Now it is being used by cybercriminals.

Conclusion

There were a few more major events that did not cause much damage to the Internet, a notable number of medium-sized incidents, and countless small issues with incorrect announcements that never went beyond the BGP peer in the neighboring AS.

What has changed after all these accidents? Providers started filtering announcements from their neighbors and customers. From end customers, only their own subnets are accepted, and announcements from peers of equal rank are limited by certain criteria. Many vendors built “magical capabilities” into their routers that allow administrators to tune BGP. For instance, an administrator can now limit the number of announcements accepted from a neighbor; once the limit is exceeded, the router drops the session with that neighbor and keeps it locked. Special organizations and services that monitor the Internet also arose.
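The announcement limit described above can be modeled as a small state machine. This is a toy model under stated assumptions, not any vendor's implementation: the class name, the method names, and the decision to keep the session down until someone resets it are all choices made for this sketch.

```python
class LimitedSession:
    """Toy model of a BGP session protected by a maximum-prefix limit."""

    def __init__(self, max_prefixes):
        self.max_prefixes = max_prefixes
        self.prefixes = set()
        self.established = True

    def receive(self, prefix):
        """Process one announcement; return False once the session is torn down."""
        if not self.established:
            return False
        self.prefixes.add(prefix)
        if len(self.prefixes) > self.max_prefixes:
            # Limit exceeded: drop everything learned and lock the session.
            self.prefixes.clear()
            self.established = False
            return False
        return True

session = LimitedSession(max_prefixes=3)
for i in range(5):
    accepted = session.receive(f"10.0.{i}.0/24")
    print(f"10.0.{i}.0/24", "accepted" if accepted else "rejected")
print("session established:", session.established)
```

A neighbor that normally sends a handful of routes and suddenly sends a full table trips the limit after a few announcements, so the flood stops at that session instead of spreading further.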

Now network operators can be notified of any significant incident on the Internet that involves their prefixes or their AS, as happened with CTBC or in the recent incident with China.

Another line of development is a new version of BGP that is free of this serious drawback. The approach involves verifying that the announcements received from a neighbor are ones that the neighbor is actually entitled to send. In other words, an Apple subnet could hardly be advertised from some province of China.
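The idea of checking whether an announcement is legitimate can be illustrated with a plain lookup table of authorized origins. This sketch shows only the principle. It uses no cryptography and implements no specific secure-BGP protocol, and the table contents (a documentation prefix and documentation AS numbers) and the function name are assumptions made for this example.

```python
import ipaddress

# Hypothetical registry: address block -> set of ASes allowed to originate it.
AUTHORIZED = {
    ipaddress.ip_network("192.0.2.0/24"): {64500},
}

def origin_is_authorized(prefix, as_path, authorized=AUTHORIZED):
    """Check that the origin AS (last in the path) may announce the prefix."""
    announced = ipaddress.ip_network(prefix)
    origin = as_path[-1]
    for block, allowed_origins in authorized.items():
        if announced.subnet_of(block):
            return origin in allowed_origins
    # In this sketch, prefixes missing from the registry are rejected.
    return False

print(origin_is_authorized("192.0.2.0/24", [64510, 64500]))  # rightful origin
print(origin_is_authorized("192.0.2.0/24", [64510, 64511]))  # wrong origin
```

The hard part in practice is not the lookup but building a registry that everyone trusts and keeping it current.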

Today there are two competing proposals: S-BGP (Secure BGP) and soBGP (Secure Origin BGP). But that’s another story.

Take care of your BGP!